There was a time when “AI” meant racks of GPUs humming away in a data center. Today, that assumption is outdated. The future of AI isn’t just in the cloud; it’s also in tiny devices that fit in your hand, or even on your wrist. Embedded systems are becoming the front lines of artificial intelligence, and the marriage of the two opens up new possibilities, from smart sensors to autonomous robots to predictive maintenance in factories.

But how do you actually bring AI into embedded systems without overwhelming the limited resources of microcontrollers and edge processors? Let’s walk through the landscape.

Choosing the Right Hardware

The first question is: what kind of AI are you running? If your model is something lightweight—say, keyword spotting or anomaly detection—you can run it on a microcontroller like an STM32 or an ESP32 using TensorFlow Lite for Microcontrollers. These chips may only have a few hundred kilobytes of RAM, but that’s enough for small neural nets.
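Whether “a few hundred kilobytes” is enough comes down to two numbers. The first is weight storage, which can stay in flash because it is read-only. The second is the peak working memory for activations, which must sit in RAM (TensorFlow Lite Micro calls this buffer the tensor arena). The sketch below is a back-of-the-envelope check under stated assumptions: int8 values at one byte each, a purely sequential model, a 20% RAM margin, and hypothetical layer sizes and chip limits. `Layer` and `estimate_fit` are illustrative names, not part of any library.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    params: int     # weights in the layer (int8 models usually keep biases as int32; ignored here)
    in_elems: int   # elements in the layer's input tensor
    out_elems: int  # elements in the layer's output tensor

def estimate_fit(layers, flash_bytes, ram_bytes, bytes_per_value=1, ram_margin_pct=20):
    """Rough fit check for a purely sequential model (hypothetical helper)."""
    # Weights are read-only, so they can stay in flash.
    weight_bytes = sum(layer.params for layer in layers) * bytes_per_value
    # In a sequential net, each layer's input and output are live at the same time;
    # the largest such pair approximates peak activation memory.
    peak_act = max((layer.in_elems + layer.out_elems) * bytes_per_value for layer in layers)
    # Add an assumed margin for runtime bookkeeping and alignment padding.
    ram_needed = peak_act * (100 + ram_margin_pct) // 100
    return {
        "weight_bytes": weight_bytes,
        "ram_needed": ram_needed,
        "fits": weight_bytes <= flash_bytes and ram_needed <= ram_bytes,
    }

# Hypothetical small audio model on a hypothetical MCU with 1 MB flash and 256 KB RAM.
kws_layers = [
    Layer(params=4_000, in_elems=490, out_elems=8_000),
    Layer(params=37_000, in_elems=8_000, out_elems=8_000),
    Layer(params=96_000, in_elems=8_000, out_elems=12),
]
report = estimate_fit(kws_layers, flash_bytes=1024 * 1024, ram_bytes=256 * 1024)
print(report)
```

Networks with skip connections keep extra tensors alive, so treat this estimate as optimistic. Once the model actually runs under TensorFlow Lite Micro, the interpreter’s `arena_used_bytes()` reports the real figure.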

If your use case is more demanding, like real-time image recognition or multi-sensor fusion, you’ll want hardware with dedicated AI acceleration. Popular options include:

- NVIDIA Jetson family (Jetson Orin Nano, Xavier NX, Nano) for robotics and vision-heavy workloads.

- Google Coral boards with Edge TPU for ultra-efficient inference.

- NXP i.MX RT series or Renesas RA microcontrollers, whose higher-end parts add ML-oriented features such as vector extensions or small NPUs for low-power inference.

The takeaway is to match your silicon to your workload. Don’t throw a Jetson at a problem that a Cortex-M4 can handle, but also don’t expect an 80 MHz MCU to do real-time video analysis.
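The “match your silicon to your workload” rule can be made concrete with a throughput estimate: multiply the multiply-accumulate operations (MACs) per inference by the inference rate, then compare the result with what the chip can sustain. Every number here is an assumption for illustration: 2 MACs per cycle (roughly what dual 16-bit SIMD multiply-accumulate offers on a Cortex-M4), 50% kernel efficiency, and the per-inference MAC counts of the hypothetical audio and video models.

```python
def required_macs_per_s(macs_per_inference, inferences_per_s):
    # Work the model demands every second.
    return macs_per_inference * inferences_per_s

def available_macs_per_s(clock_hz, macs_per_cycle, efficiency_pct):
    # Peak MAC rate scaled by the share that real kernels are assumed to reach.
    return clock_hz * macs_per_cycle * efficiency_pct // 100

def headroom(macs_per_inference, inferences_per_s, clock_hz,
             macs_per_cycle=2, efficiency_pct=50):
    """Available / required MAC rate; below 1.0 the chip cannot keep up."""
    need = required_macs_per_s(macs_per_inference, inferences_per_s)
    have = available_macs_per_s(clock_hz, macs_per_cycle, efficiency_pct)
    return have / need

# Hypothetical workloads on a hypothetical 80 MHz MCU.
kws = headroom(macs_per_inference=5_000_000, inferences_per_s=4, clock_hz=80_000_000)
video = headroom(macs_per_inference=300_000_000, inferences_per_s=30, clock_hz=80_000_000)
print(f"keyword spotting: {kws:.2f}x, video: {video:.4f}x")
```

A ratio far below 1.0x means no amount of kernel tuning will close the gap on that chip, which is where a Jetson- or Coral-class device earns its place. A ratio only slightly above 1.0 is also a warning sign, since preprocessing, memory stalls, and the rest of the firmware compete for the same cycles.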

The Tools and Frameworks That Make It Work

On the software side, a few ecosystems dominate:

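- TensorFlow Lite Micro: Runs on bare-metal or RTOS-based MCUs. It needs no operating-system support, standard C/C++ libraries, or dynamic memory allocation, working instead out of a fixed, preallocated tensor arena. Great for audio and sensor-based ML.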

- PyTorch → ONNX → Edge Deployment: PyTorch’s standard runtime isn’t built for microcontrollers, but you can export a trained model to ONNX and run it with runtimes such as ONNX Runtime or NVIDIA TensorRT on edge devices with more horsepower.

- Edge Impulse: This platform is a game-changer for developers who don’t want to reinvent the wheel. You can collect data, train models, optimize them, and deploy to a huge range of boards without leaving your browser.

- TinyML libraries: CMSIS-NN, uTensor, and microTVM are other tools worth exploring if you’re deep into optimization.
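TensorFlow Lite Micro does not assume a file system, so the converted `.tflite` model is typically compiled into the firmware as a constant byte array, often generated with a tool like `xxd -i`. The Python sketch below produces the same kind of C++ source. The function name `to_c_array`, the symbol name `g_model`, and the 16-byte `alignas` are assumptions for illustration; check the alignment your runtime expects.

```python
def to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw model bytes as C++ source, similar in spirit to `xxd -i`."""
    rows = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        rows.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    return (
        # The 16-byte alignment is an assumption; check what your runtime expects.
        f"alignas(16) const unsigned char {name}[] = {{\n"
        + "\n".join(rows)
        + "\n};\n"
        + f"const unsigned int {name}_len = {len(data)};\n"
    )

# Stand-in bytes; a build script would read the real model file in "rb" mode.
print(to_c_array(bytes([0x1C, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4C, 0x33])))
```

In a build script you would write the returned string to a `.cc` file. In C++, a namespace-scope `const` array has internal linkage by default, so other source files can only reference it if an `extern` declaration is visible where it is defined, for example through a header that the generated file includes.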

Workflow: From Model to Device

Here’s what a typical workflow looks like when bringing AI to the edge:

- Data collection: Gather sensor, audio, or image data from your target environment. Don’t underestimate this step—garbage in, garbage out.

- Training: Train your model on a PC or in the cloud, not on the embedded device itself. This is where frameworks like TensorFlow or PyTorch shine.

- Optimization: Quantize your model to 8-bit integers or even lower precision; going from 32-bit floats to int8 alone cuts weight storage roughly fourfold. Prune redundant weights or channels. Embedded devices live on efficiency.

- Deployment: Compile the optimized model into your firmware together with an inference runtime such as TensorFlow Lite Micro or an Edge Impulse deployment library, then flash the firmware to your device.

- Test in the real world: Expect some surprises here—noise, jitter, and sensor quirks can throw off accuracy. Iterate until it holds up under real conditions.
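The optimization step can be sketched in plain Python. Here, `choose_qparams`, `quantize`, and `dequantize` implement one common scheme, per-tensor asymmetric (affine) int8 quantization, where a real value x is stored as an integer q with x ≈ (q - zero_point) × scale. `magnitude_prune` zeroes the smallest-magnitude weights, a simple form of unstructured pruning. The function names and weight values are hypothetical, and production converters refine this scheme (the TensorFlow Lite int8 scheme, for example, quantizes weights symmetrically per channel).

```python
def choose_qparams(xs, qmin=-128, qmax=127):
    """Per-tensor asymmetric int8 parameters: x is approximately (q - zero_point) * scale."""
    lo = min(min(xs), 0.0)  # widen the range to include 0 so zero maps exactly
    hi = max(max(xs), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = round(qmin - lo / scale)
    return scale, zero_point

def quantize(xs, scale, zero_point, qmin=-128, qmax=127):
    # Round to the nearest step, shift by the zero point, clamp to the int8 range.
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in xs]

def dequantize(qs, scale, zero_point):
    return [(q - zero_point) * scale for q in qs]

def magnitude_prune(ws, sparsity):
    """Zero the fraction `sparsity` of weights with the smallest magnitudes."""
    k = int(len(ws) * sparsity)
    smallest = set(sorted(range(len(ws)), key=lambda i: abs(ws[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(ws)]

weights = [-0.8, -0.1, 0.0, 0.05, 0.25, 1.0]  # hypothetical layer weights
scale, zp = choose_qparams(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print(q, zp, max(abs(a - b) for a, b in zip(weights, restored)))
print(magnitude_prune(weights, sparsity=0.5))
```

Zeroed weights only save flash or cycles if the runtime or storage format exploits sparsity. Pruning whole channels or filters instead shrinks the dense tensors themselves, which is why structured pruning is often the more practical choice on MCUs.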

The Challenges to Watch Out For

AI at the edge isn’t just about “shrinking” models. You’ll wrestle with:

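- Memory constraints: A full-size model like ResNet-50 has roughly 25 million parameters, about 25 MB even at 8 bits each, far beyond what an MCU with 256 KB of RAM can handle.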

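- Power budgets: In battery-powered systems, the average power budget may be only a few milliwatts or less, so inference typically runs in short bursts while the device spends most of its time in deep sleep.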

- Latency needs: A drone avoiding obstacles can’t afford cloud roundtrips.

- Security: Your AI model itself can be an asset worth protecting against reverse engineering.
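The power-budget point is easiest to see with a duty-cycle estimate. The device wakes, runs inference, and returns to deep sleep, so battery life depends on the time-weighted average current. All figures below (10 mA active draw, 20 ms per inference, 5 µA sleep current, a 220 mAh cell) are hypothetical, and the model ignores sensor current, regulator losses, and battery derating.

```python
def average_current_ma(active_ma, active_s, sleep_ma, period_s):
    """Time-weighted average current over one wake, infer, sleep cycle."""
    if not 0 < active_s <= period_s:
        raise ValueError("active time must be positive and fit within the period")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s

def battery_life_hours(capacity_mah, avg_ma):
    # Ideal estimate: ignores self-discharge, regulator losses, and capacity derating.
    return capacity_mah / avg_ma

# Hypothetical figures: 10 mA while inferring for 20 ms, 5 uA asleep, 220 mAh cell.
once_per_s = average_current_ma(10.0, 0.020, 0.005, period_s=1.0)
ten_per_s = average_current_ma(10.0, 0.020, 0.005, period_s=0.1)
print(f"1 Hz:  {once_per_s:.4f} mA, {battery_life_hours(220, once_per_s) / 24:.1f} days")
print(f"10 Hz: {ten_per_s:.4f} mA, {battery_life_hours(220, ten_per_s) / 24:.1f} days")
```

Because sleep current is tiny, the inference rate dominates: with these hypothetical figures, waking ten times per second instead of once cuts battery life by roughly a factor of ten.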

Where to Learn and Experiment

If you’re new to this space, here are some go-to resources:

- tinyML Foundation: Community and conferences dedicated to AI on microcontrollers.

- Edge Impulse Studio: Easiest on-ramp to building and deploying models.

- NVIDIA Jetson tutorials: Tons of robotics and vision examples.

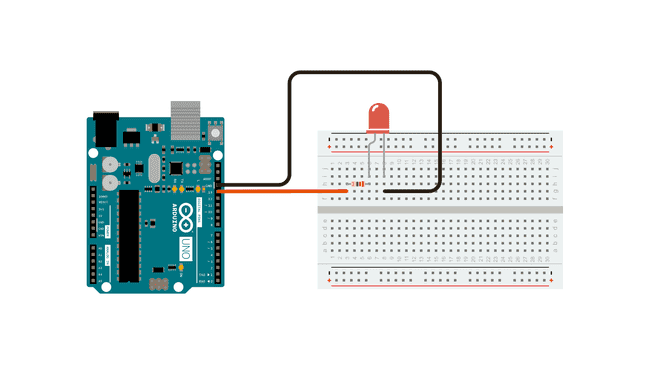

- Arduino ML kits: Beginner-friendly boards with TensorFlow Lite support.

What makes this field so exciting is that AI is no longer locked away in massive servers. With the right combination of silicon, tools, and workflows, you can make even the smallest devices “intelligent.” The next wave of innovation won’t just be about bigger models in the cloud; it’ll be about billions of tiny systems quietly running AI at the edge: smart, efficient, and everywhere.

Once you see a coin-sized board making sense of the world on its own, you realize this isn’t just a technical shift. It’s a paradigm shift. And it’s happening right now.