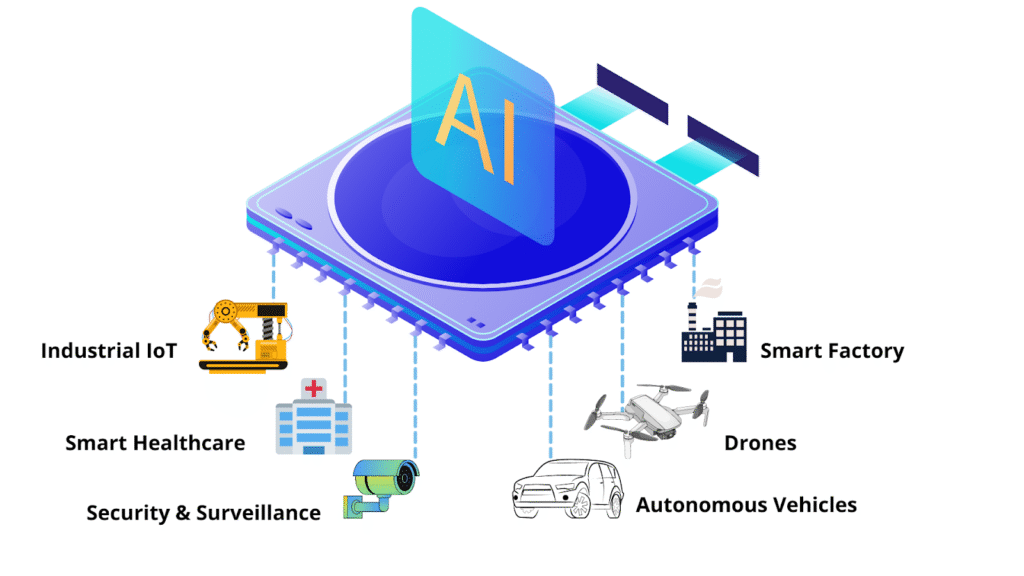

Artificial intelligence is no longer limited to cloud servers and high-end workstations. Thanks to advances in hardware and software, AI capabilities are increasingly being embedded directly into edge devices. From smart cameras to autonomous drones, integrating AI into embedded systems opens up powerful new possibilities, but it also introduces unique design considerations.

🤖 Why AI in Embedded Systems Matters

AI enables devices to process data locally, make decisions in real time, and keep working without a constant network connection. Skipping the round trip to the cloud cuts both latency and bandwidth use, which matters most in latency-sensitive applications such as machine vision, predictive maintenance, and natural language processing.

🛠 Hardware Platforms for Embedded AI

- NVIDIA Jetson Orin Nano: A compact but powerful module built on NVIDIA's Ampere GPU architecture, well suited to AI workloads such as object detection and speech recognition. It runs models from frameworks like TensorFlow and PyTorch, as well as models exported to ONNX.

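- Google Coral Dev Board: Equipped with an Edge TPU for efficient inference, particularly in low-power scenarios. The Edge TPU runs TensorFlow Lite models that are fully 8-bit quantized and compiled with the Edge TPU Compiler.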

- Raspberry Pi + AI accelerators: An affordable prototyping option when paired with a USB-based accelerator such as the Coral USB Accelerator or Intel's Neural Compute Stick.

- STM32 with TinyML: Low-power Arm Cortex-M microcontrollers that can run lightweight AI models in ultra-constrained environments, where RAM is often measured in kilobytes.

⚙️ Software and Frameworks

- TensorFlow Lite and PyTorch Mobile: Runtimes for deploying AI models on resource-limited hardware. TensorFlow Lite for Microcontrollers extends this to MCU-class devices such as the STM32.

- NVIDIA JetPack SDK: Includes CUDA, cuDNN, and TensorRT for accelerated inference on Jetson platforms.

- Edge Impulse: A cloud-based platform for collecting data, training models, and deploying them to embedded hardware.

📏 Key Design Considerations

- Performance vs. Power Consumption: AI tasks like image classification can be compute-intensive. Choose hardware that meets your latency and throughput targets while staying within the device's power budget, especially on battery-powered systems.

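- Memory Limitations: Embedded devices have far less RAM and storage than desktop systems. Shrink models through pruning, quantization, or knowledge distillation; for example, storing weights as 8-bit integers instead of 32-bit floats cuts their size by roughly 4×.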

- Thermal Management: High-performance AI chips can generate significant heat, and sustained inference may trigger thermal throttling. Provide adequate cooling (heatsinks, airflow, or fans) to keep performance stable.

- Data Privacy & Security: On-device inference improves privacy by keeping sensitive data local, but the device itself still needs protection, such as encryption of stored data and models and a secure boot chain.
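Quantization is the easiest of these memory optimizations to see in numbers. The sketch below is plain Python written only for illustration: it applies symmetric per-tensor int8 quantization to a few hypothetical weight values and reports the reconstruction error and storage savings. It is a simplified model of the arithmetic, assuming a flat list of floats; real converters such as the TensorFlow Lite converter work on whole tensors and typically use per-channel scales for weights.

```python
"""Minimal sketch of symmetric per-tensor int8 post-training quantization.

Assumption: weights are a flat list of Python floats. Real toolchains work
on tensors and usually use per-channel scales; this only shows the math.
"""
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to int8 codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise give scale 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.05, 0.0, 1.27, -0.4]  # hypothetical weights
    q, scale = quantize_int8(weights)
    approx = dequantize_int8(q, scale)
    max_err = max(abs(a - w) for a, w in zip(approx, weights))
    print("int8 codes:", q)
    print(f"scale={scale:.4f}, max abs error={max_err:.4f}")
    # float32 stores 4 bytes per weight; int8 stores 1 (plus one shared scale).
    print(f"bytes: float32={4 * len(weights)}, int8={len(q)} + scale")
```

Because every value is rounded to the nearest step of size `scale`, the error per weight is at most half a step; whether that is acceptable for a given model has to be checked by measuring accuracy after conversion.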

🚀 Example Applications

- Autonomous Drones: Jetson Orin Nano running real-time obstacle avoidance.

- Industrial IoT Predictive Maintenance: An STM32 microcontroller running a TinyML model that monitors vibrations.

- Smart Retail Analytics: Coral Dev Board counting foot traffic and analyzing shopper behavior.

💡 Tips for a Smooth AI Integration

- Start with pre-trained models and adapt them with transfer learning.

- Profile inference times early to avoid bottlenecks.

- Use model compression techniques to fit into embedded memory constraints.

- Leverage hardware-specific SDKs for maximum performance.
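Profiling inference times early can start with something as simple as a timing loop. This is a minimal sketch, assuming the model call is wrapped in a Python callable; `run_inference` is a hypothetical placeholder for the real interpreter or engine invocation, and the 33.3 ms budget assumes a 30 FPS video pipeline. The harness reports the 95th percentile alongside the mean because occasional slow frames, not the average, are what break real-time deadlines.

```python
"""Minimal latency-profiling harness for an inference callable.

Assumption: `run_inference` below is a hypothetical stand-in for whatever
actually invokes your model (e.g. an interpreter or inference engine call).
"""
import math
import statistics
import time
from typing import Callable, Dict


def profile_latency(fn: Callable[[], object], warmup: int = 5,
                    runs: int = 50) -> Dict[str, float]:
    """Time `fn` after warm-up calls; return latency stats in milliseconds."""
    # Warm-up calls let caches, lazy initialization, and clocks settle.
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 95th percentile: tail latency matters for real-time loops.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "mean_ms": statistics.fmean(samples),
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def run_inference() -> None:
    # Hypothetical placeholder workload standing in for a real model call.
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    stats = profile_latency(run_inference)
    budget_ms = 33.3  # assumed per-frame budget for a ~30 FPS pipeline
    print({k: round(v, 3) for k, v in stats.items()})
    print("p95 within budget:", stats["p95_ms"] <= budget_ms)
```

Running the same harness on the target board, rather than a development PC, gives the numbers that actually matter, since clock speeds, accelerators, and thermal limits differ.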

Summary Table:

| Platform | AI Strengths | Ideal Use Case |
|---|---|---|
| Jetson Orin Nano | High performance, GPU acceleration | Real-time vision & robotics |
| Coral Dev Board | Low power, Edge TPU acceleration | IoT analytics & sensor fusion |
| Raspberry Pi + Accelerator | Flexible, low-cost prototyping | DIY AI devices |
| STM32 + TinyML | Ultra-low power | Edge sensor intelligence |